Duration 23:15

Pytorch NLP Model Training & Fine-Tuning on Colab TPU Multi GPU with Accelerate

Published 2 May 2021

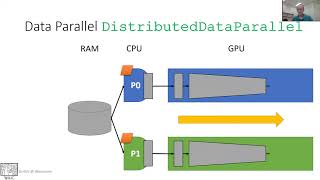

In this NLP tutorial, we're looking at a new Hugging Face library, "accelerate", that can help you port your existing PyTorch training script to a multi-GPU or TPU machine with only minimal changes to your code. As the description says, "🤗 Accelerate was created for PyTorch users who like to write the training loop of PyTorch models but are reluctant to write and maintain the boilerplate code needed to use multi-GPUs/TPU/fp16. 🤗 Accelerate abstracts exactly and only the boilerplate code related to multi-GPUs/TPU/fp16 and leaves the rest of your code unchanged."

🤗 Accelerate also provides a `notebook_launcher` function you can use in a notebook to launch a distributed training. This is especially useful for Colab or Kaggle notebooks with a TPU backend. Just define your training loop in a `training_function`, then, in your last cell, pass that function to `notebook_launcher`.

- Hugging Face Accelerate: https://github.com/huggingface/accelerate
- Google TPU Colab for PyTorch Model Training: https://colab.research.google.com/drive/1FdsGYsS4Qx67__A3o4q8hTpRH5BHcvzi?usp=sharing

The same approach also works on Kaggle Notebooks (TPU), or on Google Colab as demoed in the video.
